ILIAS: Instance-Level Image retrieval At Scale

Giorgos Kordopatis-Zilos Vladan Stojnić Anna Manko Pavel Šuma Nikos Efthymiadis Nikolaos-Antonios Ypsilantis Zakaria Laskar Jiří Matas Ondřej Chum Giorgos Tolias

Visual Recognition Group, Faculty of Electrical Engineering, Czech Technical University in Prague

Dataset intro

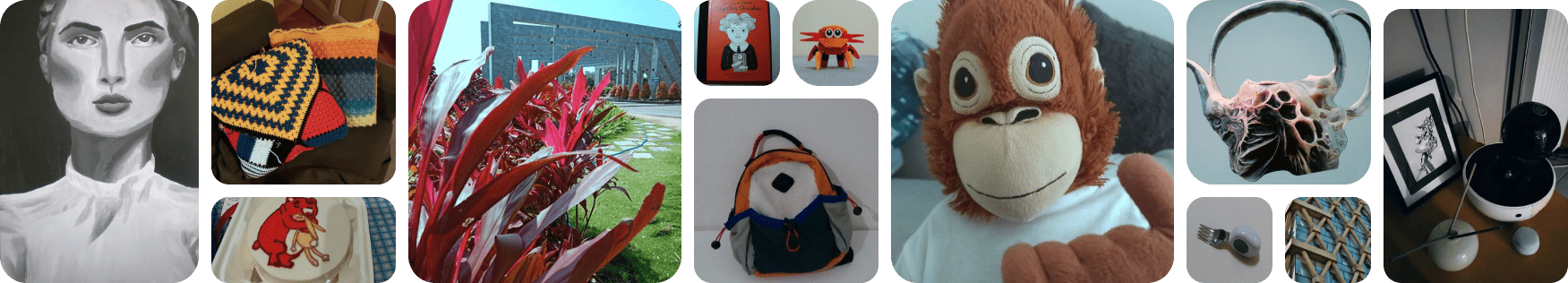

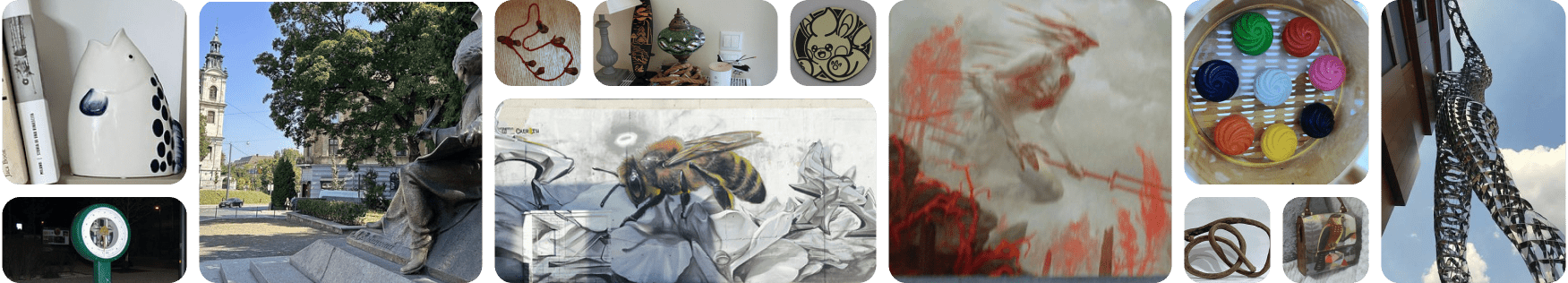

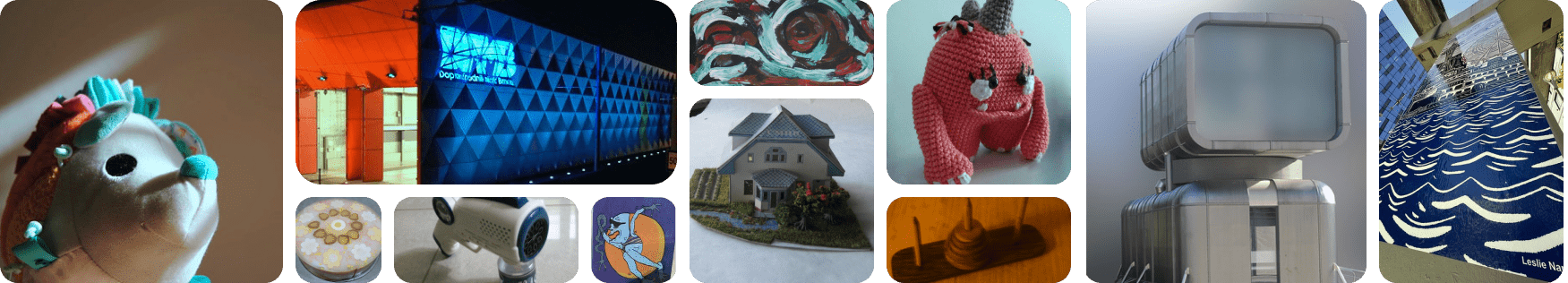

This work introduces ILIAS, a new test dataset for Instance-Level Image retrieval At Scale. It is designed to support future research in image-to-image and text-to-image retrieval of particular objects, and it also serves as a large-scale benchmark for evaluating the representations of vision foundation models and vision-language models (VLMs).

ILIAS includes queries and positive images for 1,000 object instances, covering diverse conditions and domains. Retrieval is performed against 100M distractor images from YFCC100M. To avoid false negatives (distractor images that actually depict a query object), only query objects that emerged after 2014, the YFCC100M compilation date, are included.
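The retrieval protocol above can be illustrated with a minimal sketch, assuming global descriptors (one embedding per image) compared by cosine similarity and scored with average precision (AP). This page does not state the evaluation metric, so AP, the optional top-k cutoff `k`, and its normalisation by min(k, number of positives) are assumptions; the function names and toy vectors are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def rank_database(query, database):
    """Return database image ids sorted by descending cosine similarity to the query.

    `database` maps an image id (positive or distractor) to its global descriptor.
    """
    q = l2_normalize(query)
    scores = {
        img_id: sum(a * b for a, b in zip(q, l2_normalize(vec)))
        for img_id, vec in database.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


def average_precision(ranking, positives, k=None):
    """Average precision of a ranked list of ids against a set of positive ids.

    If `k` is given, only the top-k results are scored and the sum is normalised
    by min(k, len(positives)); this cutoff convention is an assumption.
    """
    if k is not None:
        ranking = ranking[:k]
    hits, precision_sum = 0, 0.0
    for rank, img_id in enumerate(ranking, start=1):
        if img_id in positives:
            hits += 1
            precision_sum += hits / rank  # precision at the rank of each retrieved positive
    denom = len(positives) if k is None else min(k, len(positives))
    return precision_sum / denom if denom else 0.0


# Toy example: two positives and two distractors with 2-D "embeddings".
database = {
    "pos_a": [1.0, 0.1],
    "pos_b": [0.8, 0.6],
    "dis_1": [0.9, 0.2],
    "dis_2": [0.0, 1.0],
}
query = [1.0, 0.0]
ranking = rank_database(query, database)
ap = average_precision(ranking, positives={"pos_a", "pos_b"})
print(ranking, round(ap, 3))
```

In the toy example the distractor `dis_1` outranks the positive `pos_b`, which lowers AP from 1.0 to 5/6 (about 0.833). With 100M distractors an exhaustive loop like this is impractical; an approximate nearest-neighbour index (for example FAISS) would normally take the place of `rank_database`.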

Key insights from extensive benchmarking:

- models fine-tuned on specific domains, such as landmarks or products, excel in that domain but fail on ILIAS

- learning a linear adaptation layer using multi-domain class supervision results in performance improvements, especially for vision-and-language models

- local descriptors in retrieval re-ranking are still a key ingredient, especially in the presence of severe background clutter

- the text-to-image performance of the vision-language foundation models is surprisingly close to the corresponding image-to-image case.
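The finding on local descriptors refers to a two-stage pipeline: a global descriptor retrieves a shortlist, and local descriptors re-rank it. The sketch below is a deliberately simple stand-in, not one of the re-ranking methods benchmarked in ILIAS: it counts mutual nearest-neighbour matches between local descriptors and re-orders only the shortlist. The names `rerank`, `mutual_nn_matches`, `shortlist_size`, the similarity threshold `min_sim`, and the toy data are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mutual_nn_matches(query_locals, db_locals, min_sim=0.8):
    """Count query/database local-descriptor pairs that are mutual nearest
    neighbours and whose cosine similarity is at least `min_sim`."""
    if not query_locals or not db_locals:
        return 0
    sim = [[cosine(q, d) for d in db_locals] for q in query_locals]
    count = 0
    for i, row in enumerate(sim):
        j = max(range(len(row)), key=row.__getitem__)  # best database match for query descriptor i
        i_back = max(range(len(sim)), key=lambda r: sim[r][j])  # best query match for database descriptor j
        if i_back == i and row[j] >= min_sim:
            count += 1
    return count


def rerank(query, database, shortlist_size=100):
    """Stage 1: rank all images by global cosine similarity.
    Stage 2: re-order the top `shortlist_size` images by mutual-NN local matches,
    breaking ties with the global score; the remaining images keep their order."""
    def global_score(img_id):
        return cosine(query["global"], database[img_id]["global"])

    order = sorted(database, key=global_score, reverse=True)
    shortlist, rest = order[:shortlist_size], order[shortlist_size:]

    def local_key(img_id):
        matches = mutual_nn_matches(query["locals"], database[img_id]["locals"])
        return (matches, global_score(img_id))

    return sorted(shortlist, key=local_key, reverse=True) + rest


# Toy example: "clutter" is globally closest to the query but shares no local parts.
query = {"global": [1.0, 0.0], "locals": [[1, 0, 0], [0, 1, 0]]}
database = {
    "clutter": {"global": [1.0, 0.05], "locals": [[0, 0, 1]]},
    "positive": {"global": [0.7, 0.7], "locals": [[1, 0, 0], [0, 1, 0], [0, 0, 1]]},
    "far": {"global": [0.0, 1.0], "locals": [[1, 0, 0]]},
}
reranked = rerank(query, database, shortlist_size=2)
print(reranked)
```

In the toy data, the distractor `clutter` wins on global similarity, but only `positive` shares local descriptors with the query, so re-ranking moves it to the top. This mimics, in miniature, why local matching helps when a global descriptor is dominated by background clutter.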

Instances were manually collected to capture challenging conditions and diverse domains

Large-scale retrieval is conducted against 100M distractor images from YFCC100M.

To avoid false negatives, all objects are known to have been designed after 2014, the YFCC100M compilation date.

SOTA models were evaluated during the dataset collection

Benchmark

Global representation models for Image-to-Image

Global representation models for Text-to-Image

Local representation models for Image-to-Image with Reranking

Explore the collected data for your instance-level research!


Citation

If you find our project useful, please consider citing us:

@article{#coming-soon,
  title={ILIAS: Instance-Level Image retrieval At Scale},
  author={},
  journal={#coming-soon},
  year={#coming-soon},
}

Results

Submit your results here:

If you have any further questions, please don't hesitate to reach out to georgekordopatis@gmail.com

Acknowledgment

We are grateful to everyone who contributed to ILIAS. A special thank you to Larysa Ivashechkina for her invaluable work in data annotation. We also appreciate the efforts and participation of all the external data collectors: Yankun Wu, Noa Garcia, Yannis Kalantidis, Dmytro Mishkin, Tomáš Jelínek, Dimitris Karageorgiou, Markos Zampoglou, Celeste Abreu, Aggeliki Tserota, Christina Tserota, Eleni Karantali, Eva Tsiliakou, Kelly Kordopati, Panagiotis Tassis, Ruslan Rozumnyi.